WILDFIRE

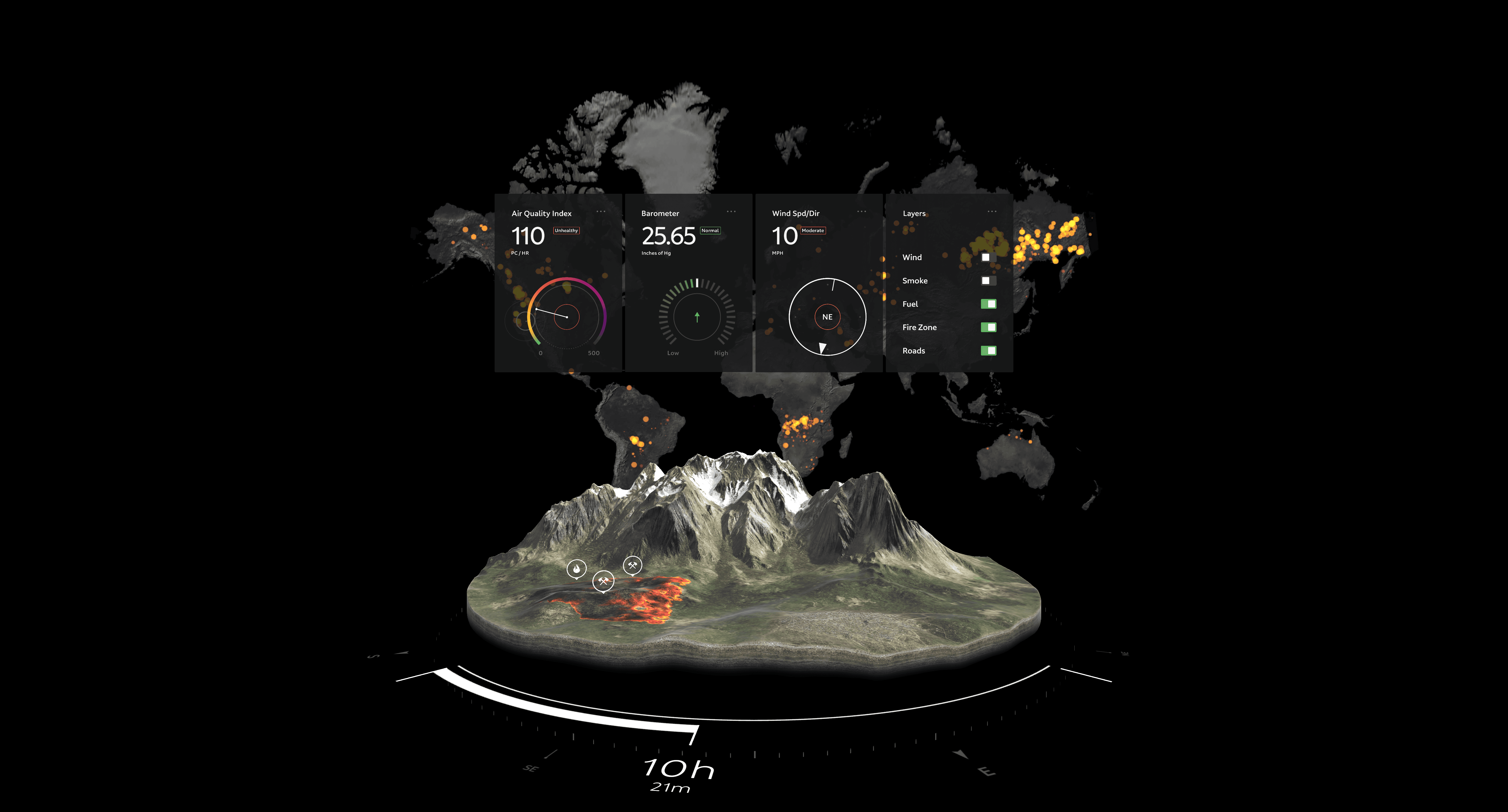

At Magic Leap, I was responsible for crafting an intuitive, immersive intro for "WildFire," an experience designed to showcase the capabilities of the then-new Magic Leap 2 headset. "WildFire" offers a command-and-control view of global wildfires, combining detailed data visualization with real-time, spatial Augmented Reality interactions.

Technically, achieving this visual feat involved multiple layers of sophisticated development:

Satellite Data Utilization: NASA's high-resolution satellite scans provided detailed topographic maps, which supplied the height and color data. The wildfire data came directly from NASA's MODIS instrument aboard the Terra and Aqua satellites.

Procedural Sphere Generation: The dynamic-resolution quad sphere was generated procedurally in C#. Crucially, its UV layout let the globe transform seamlessly into the wall map, so the same shader could be used for both.
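To make the idea concrete, here is a minimal sketch of a quad sphere whose UVs double as flat map coordinates. It is written in Python purely for illustration (the original was C# in Unity), and every name in it (`build_quad_sphere`, `equirect_uv`, `morph_to_wall`) is hypothetical. It also assumes an equirectangular UV layout, which the project may or may not have used. Each cube face is subdivided into a grid, every grid point is pushed onto the unit sphere, and the UV is derived from longitude and latitude so that the same coordinates address both the globe and the wall map.

```python
import math

def cube_face_point(face, a, b):
    """Point on the unit cube for face 0..5, with a and b in [-1, 1]."""
    return [
        (1.0, b, -a),    # +X
        (-1.0, b, a),    # -X
        (a, 1.0, -b),    # +Y
        (a, -1.0, b),    # -Y
        (a, b, 1.0),     # +Z
        (-a, b, -1.0),   # -Z
    ][face]

def normalize(p):
    """Project a cube point onto the unit sphere."""
    x, y, z = p
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

def equirect_uv(p):
    """Assumed layout: u follows longitude, v follows latitude, both in [0, 1]."""
    x, y, z = p
    lon = math.atan2(x, z)                    # -pi .. pi
    lat = math.asin(max(-1.0, min(1.0, y)))   # -pi/2 .. pi/2
    return (lon / (2.0 * math.pi) + 0.5, lat / math.pi + 0.5)

def build_quad_sphere(resolution):
    """Return (position, uv) pairs; resolution is the subdivision count per face edge."""
    vertices = []
    for face in range(6):
        for j in range(resolution + 1):
            for i in range(resolution + 1):
                a = 2.0 * i / resolution - 1.0
                b = 2.0 * j / resolution - 1.0
                pos = normalize(cube_face_point(face, a, b))
                vertices.append((pos, equirect_uv(pos)))
    return vertices

def morph_to_wall(pos, uv, t, width=2.0, height=1.0):
    """Blend a vertex from the globe (t=0) to a flat map laid out by its UV (t=1)."""
    flat = ((uv[0] - 0.5) * width, (uv[1] - 0.5) * height, 0.0)
    return tuple((1.0 - t) * s + t * f for s, f in zip(pos, flat))
```

Because the flat position is computed from the UV alone, the texture lookups never change during the morph, which is what allows one shader to serve both shapes. The sketch leaves out triangle indices and does not handle the seam where longitude wraps around, both of which a real mesh would need.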

Custom Shader Development: A tailored shader combined all of the topographic data images. Its features included map wipe effects and faked lighting, which kept rendering cheap, among others.

Data Processing: With data ranging from colossal wildfires to minor microwave fires, a considerable preprocessing step was needed. This reduced hundreds of thousands of data points to a more manageable ten thousand, which were then converted into a graphics buffer format.
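The write-up does not say how the reduction was performed, so the following Python sketch is only one plausible approach under stated assumptions: detections are `(lat, lon, intensity)` tuples, points are binned into a latitude/longitude grid, only the strongest detection per cell is kept, and the result is capped at a fixed budget. The function names, the `cell_deg` parameter, and the three-float record layout are all hypothetical.

```python
import math
import struct

def reduce_detections(detections, budget=10_000, cell_deg=0.5):
    """Keep the strongest detection per grid cell, then the top `budget` overall.

    Assumption: each detection is a (lat, lon, intensity) tuple.
    """
    strongest = {}
    for lat, lon, intensity in detections:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        if cell not in strongest or intensity > strongest[cell][2]:
            strongest[cell] = (lat, lon, intensity)
    ranked = sorted(strongest.values(), key=lambda d: d[2], reverse=True)
    return ranked[:budget]

def pack_for_gpu(points):
    """Pack points as tightly packed little-endian float32 triples (12 bytes each)."""
    buf = bytearray()
    for lat, lon, intensity in points:
        buf += struct.pack("<3f", lat, lon, intensity)
    return bytes(buf)

if __name__ == "__main__":
    sample = [
        (10.1, 20.1, 5.0),
        (10.2, 20.2, 9.0),    # same cell as the line above, stronger
        (-33.0, 150.0, 1.0),
        (45.0, -120.0, 3.0),
    ]
    kept = reduce_detections(sample, budget=2)
    print(kept, len(pack_for_gpu(kept)))
```

A fixed-size record like this maps naturally onto a structured GPU buffer, since the consumer can index point `i` at byte offset `12 * i` without any parsing.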

Parallel Graphics Buffer: To visualize this dataset within our project timeline, we fed the data through a parallel graphics buffer directly into Unity’s VFX Graph.

The latest version of the application can be accessed here:

https://ml2-developer.magicleap.com/downloads